The primary task of the Detector Control System (DCS) is to ensure safe and reliable operation of the TPC. It provides remote control and monitoring of all detector equipment in such a way that the TPC can be operated from a single workplace (the ALICE experimental control room at LHC Point 2) through a set of operator interface panels. The system is intended to provide optimal operational conditions so that the data taken by the TPC is of the highest quality.

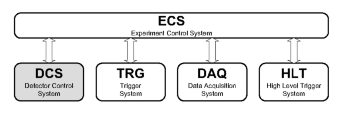

The TPC control system is part of the ALICE DCS. Like the other three ALICE online systems (the Data Acquisition system (DAQ), the Trigger system (TRG) and the High Level Trigger system (HLT)), the ALICE DCS is controlled by the Experiment Control System (ECS), which is responsible for synchronizing the four systems.

The hardware architecture of the TPC DCS can be divided into three functional layers. The field layer contains the actual hardware to be controlled (power supplies, front-end electronics (FEE), etc.). The control layer consists of devices that collect and process information from the field layer and make it available to the supervisory layer. At the same time, the devices of the control layer receive commands from the supervisory layer, which they process and distribute to the field layer. The equipment in the supervisory layer consists of personal computers that provide the user interfaces and connect to disk servers holding, among other things, the databases used for archiving data. The three layers communicate mainly over a Local Area Network (LAN).
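
The division of labor between the layers can be illustrated with a minimal Python sketch. All class names, the channel names `HV_A00` and `LV_A00`, and the grouping of channels under a single controller are hypothetical; direct method calls stand in for the LAN, and a plain list stands in for the archive database. The sketch shows only the two directions of flow: readings travel up from the field layer, and commands travel down from the supervisory layer.

```python
from dataclasses import dataclass, field


@dataclass
class FieldDevice:
    """Field layer: the hardware itself, e.g. one power-supply channel (hypothetical model)."""
    name: str
    voltage: float = 0.0

    def apply(self, setpoint: float) -> None:
        # Real hardware would ramp; here the setpoint is applied immediately.
        self.voltage = setpoint


@dataclass
class Controller:
    """Control layer: collects readings from field devices and distributes commands to them."""
    devices: dict = field(default_factory=dict)

    def add(self, device: FieldDevice) -> None:
        self.devices[device.name] = device

    def read_all(self) -> dict:
        # Upward path: field layer -> control layer -> supervisory layer.
        return {name: dev.voltage for name, dev in self.devices.items()}

    def command(self, name: str, setpoint: float) -> None:
        # Downward path: supervisory layer -> control layer -> field layer.
        self.devices[name].apply(setpoint)


class Supervisor:
    """Supervisory layer: operator actions plus a stand-in for the archive database."""

    def __init__(self, controller: Controller) -> None:
        self.controller = controller
        self.archive: list = []

    def set_voltage(self, name: str, setpoint: float) -> None:
        self.controller.command(name, setpoint)

    def poll_and_archive(self) -> dict:
        readings = self.controller.read_all()
        self.archive.append(readings)
        return readings


ctrl = Controller()
ctrl.add(FieldDevice("HV_A00"))
ctrl.add(FieldDevice("LV_A00"))
sup = Supervisor(ctrl)
sup.set_voltage("HV_A00", 1500.0)
print(sup.poll_and_archive())  # {'HV_A00': 1500.0, 'LV_A00': 0.0}
```

The supervisory layer never touches a `FieldDevice` directly; everything goes through the control layer, which mirrors the layering described above.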

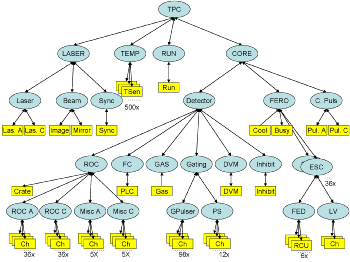

The software architecture is a tree structure that represents the structure of the TPC, its sub-systems and its devices. The tree is composed of nodes, each of which has a single "parent", except for the top node, called the "root node". A node may have zero, one or more children. There are two types of nodes: the parent nodes are called Control Units (CU) and the leaf nodes are called Device Units (DU). A control unit controls the sub-tree below it, and a device unit "drives" a device. The behavior and functionality of each control unit are implemented as a finite state machine.
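
A minimal sketch of such a tree, assuming hypothetical states (`OFF`, `ON`, `MIXED`, `ERROR`) and commands (`GO_ON`, `GO_OFF`) rather than the actual TPC state machines: each Device Unit is a small finite state machine, and each Control Unit forwards commands to its children and derives its own state from theirs. The summarizing rule used here (any child in `ERROR` gives `ERROR`, agreeing children give their common state, otherwise `MIXED`) is an assumption chosen for illustration, as are the node names.

```python
class DeviceUnit:
    """Leaf node: 'drives' one device and holds its state (hypothetical states/commands)."""

    TRANSITIONS = {("OFF", "GO_ON"): "ON", ("ON", "GO_OFF"): "OFF"}

    def __init__(self, name: str) -> None:
        self.name = name
        self.state = "OFF"  # a device driver could also set "ERROR" (not modeled here)

    def handle(self, command: str) -> None:
        # Finite state machine: unknown (state, command) pairs leave the state unchanged.
        self.state = self.TRANSITIONS.get((self.state, command), self.state)


class ControlUnit:
    """Parent node: forwards commands down its sub-tree and derives its state from its children."""

    def __init__(self, name: str, children: list) -> None:
        self.name = name
        self.children = children

    def handle(self, command: str) -> None:
        for child in self.children:
            child.handle(command)

    @property
    def state(self) -> str:
        states = {child.state for child in self.children}
        if "ERROR" in states:
            return "ERROR"
        if len(states) == 1:
            return next(iter(states))  # all children agree
        return "MIXED"  # children disagree (or there are none)


# Hypothetical fragment of the tree: root CU -> sector CU -> two DUs.
hv = DeviceUnit("HV_A00")
lv = DeviceUnit("LV_A00")
sector = ControlUnit("TPC_A00", [hv, lv])
root = ControlUnit("TPC", [sector])

root.handle("GO_ON")
print(root.state)  # ON
lv.handle("GO_OFF")
print(root.state)  # MIXED
```

Because a Control Unit computes its state from its children on demand, a change at any leaf is immediately visible at the root, while commands issued at the root reach every leaf of its sub-tree.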

The control system is built on a "controls framework" that is flexible and allows separately developed components to be integrated easily. This framework includes drivers for different types of hardware, communication protocols, and configurable components for commonly used applications such as high- or low-voltage power supplies. The framework also contains many other utilities, such as interfaces to the various databases (configuration, archiving), visualization tools, access control, and alarm configuration and reporting.
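
As an illustration of what "configurable" can mean here, the sketch below applies one generic alarm check to both a high-voltage and a low-voltage channel, which differ only in their configuration. The `ChannelConfig` fields, the 2 % warning and 5 % alarm limits, and the nominal voltages are hypothetical values chosen for the example, not TPC settings, and the code does not reproduce the framework's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelConfig:
    """Hypothetical configuration of one power-supply channel."""
    name: str
    nominal_v: float              # must be non-zero
    warn_fraction: float = 0.02   # assumed: warning above 2 % relative deviation
    alarm_fraction: float = 0.05  # assumed: alarm above 5 % relative deviation


def classify(cfg: ChannelConfig, measured_v: float) -> str:
    """Return 'OK', 'WARNING' or 'ALARM' for one reading, using the configured limits."""
    deviation = abs(measured_v - cfg.nominal_v) / cfg.nominal_v
    if deviation > cfg.alarm_fraction:
        return "ALARM"
    if deviation > cfg.warn_fraction:
        return "WARNING"
    return "OK"


# The same component serves HV and LV channels; only the configuration differs.
configs = [ChannelConfig("HV_A00", 1500.0), ChannelConfig("LV_A00", 4.3)]
readings = {"HV_A00": 1512.0, "LV_A00": 4.0}
for cfg in configs:
    print(cfg.name, classify(cfg, readings[cfg.name]))
# HV_A00 OK
# LV_A00 ALARM
```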

The core software of the control system is the commercial SCADA (Supervisory Control And Data Acquisition) system PVSSII (Prozess Visualisierungs und Steuerungs System, German for "process visualization and control system") from the company ETM. PVSSII is an object-oriented process visualization and control system that is used in industry and research, including by all four LHC experiments. PVSSII is event-driven and has a highly distributed architecture. The SCADA system for the TPC is distributed over twelve computers.
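
The event-driven principle can be sketched in a few lines: subscribers are notified only when a value actually changes, instead of polling it. The `DatapointBus` class and the datapoint name `HV_A00.vMon` are hypothetical; this does not reproduce the PVSSII API, and it ignores the distribution of the system over several computers.

```python
from collections import defaultdict
from typing import Callable


class DatapointBus:
    """Hypothetical event-driven store: callbacks run only when a datapoint value changes."""

    def __init__(self) -> None:
        self._values: dict = {}
        self._subscribers = defaultdict(list)

    def subscribe(self, dp: str, callback: Callable[[str, float], None]) -> None:
        self._subscribers[dp].append(callback)

    def set(self, dp: str, value: float) -> None:
        if dp in self._values and self._values[dp] == value:
            return  # no change, so no event is generated
        self._values[dp] = value
        for callback in self._subscribers[dp]:
            callback(dp, value)


events = []
bus = DatapointBus()
bus.subscribe("HV_A00.vMon", lambda dp, v: events.append((dp, v)))
bus.set("HV_A00.vMon", 1500.0)
bus.set("HV_A00.vMon", 1500.0)  # unchanged: no callback
bus.set("HV_A00.vMon", 1499.5)
print(events)  # [('HV_A00.vMon', 1500.0), ('HV_A00.vMon', 1499.5)]
```

Reacting only to changes keeps network and CPU load proportional to activity in the detector rather than to the number of monitored values.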